How To Argue Against AI-First Research

Vitaly Friedman 2025-03-28T09:00:00+00:00 2025-04-01T21:33:18+00:00 With AI upon us, companies have recently been turning their attention to “synthetic” user testing: AI-driven research that replaces UX research. There, questions are answered by AI-generated “customers,” […]

Ux

How to Put Your WordPress Site in Maintenance Mode

There are times when you need to temporarily take your WordPress site offline, whether for updates, troubleshooting, or redesigns. Instead of displaying a broken or unfinished site, maintenance mode allows you to show visitors a professional message while you work behind the scenes. Unlike a […]

Wordpress

How To Build Confidence In Your UX Work

Vitaly Friedman 2025-03-11T15:00:00+00:00 2025-03-11T22:04:08+00:00 When I start any UX project, typically, there is very little confidence in the successful outcome of my UX initiatives. In fact, there is quite […]

Ux

How To Argue Against AI-First Research

Vitaly Friedman 2025-03-28T09:00:00+00:00

2025-04-01T21:33:18+00:00

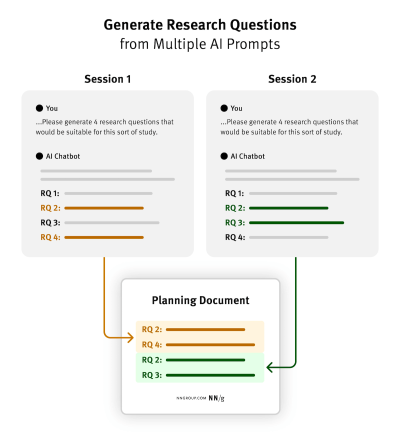

With AI upon us, companies have recently been turning their attention to “synthetic” user testing: AI-driven research that replaces UX research. There, questions are answered by AI-generated “customers,” and human tasks are “performed” by AI agents.

However, AI isn’t used just for desk research or discovery; it’s used for actual usability testing, with “AI personas” that mimic the behavior of actual customers within the actual product. It’s like UX research, just… well, without the users.

If this sounds worrying, confusing, and outlandish, it is. But this doesn’t stop companies from adopting AI “research” to drive business decisions, even though, unsurprisingly, the undertaking can be dangerous, risky, and expensive, and usually diminishes user value.

This article is part of our ongoing series on UX. You can find more details on design patterns and UX strategy in Smart Interface Design Patterns 🍣 — with live UX training coming up soon. Free preview.

Fast, Cheap, Easy… And Imaginary

Erika Hall famously noted that “design is only as ‘human-centered’ as the business model allows.” If a company is heavily driven by hunches, assumptions, and strong opinions, there will be little to no interest in properly-done UX research in the first place.

But unlike UX research, AI research (conveniently called synthetic testing) is fast, cheap, and easy to re-run. It doesn’t raise uncomfortable questions, and it doesn’t flag wrong assumptions. It doesn’t require user recruitment, much time, or long-winded debates.

And it can manage thousands of AI personas at once. By studying AI-generated output, we can discover common journeys, navigation patterns, and expectations. We can anticipate how people behave and what they would do.

Well, that’s the big promise. And that’s where we start running into big problems.

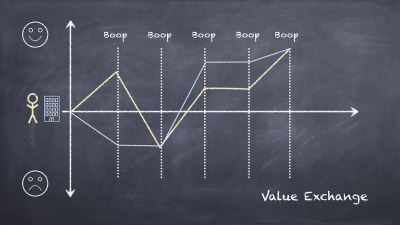

LLMs Are People Pleasers

Good UX research has roots in what actually happened, not what might have happened or what might happen in the future.

By nature, LLMs are trained to provide the most “plausible” or most likely output based on patterns captured in their training data. These patterns, however, emerge from expected behaviors of statistically “average” profiles extracted from content on the web. But these people don’t exist; they never have.

By default, user segments are not scoped and not curated. They don’t represent the customer base of any product. So to be useful, we must eloquently prompt AI by explaining who users are, what they do, and how they behave. Otherwise, the output won’t match user needs and won’t apply to our users.

When “producing” user insights, LLMs can’t generate unexpected things beyond what we’re already asking about.

In comparison, researchers are only able to define what’s relevant as the process unfolds. In actual user testing, insights can help shift priorities or radically reimagine the problem we’re trying to solve, as well as potential business outcomes.

Real insights come from unexpected behavior, from reading behavioral clues and emotions, from observing a person doing the opposite of what they said. We can’t replicate it with LLMs.

AI User Research Isn’t “Better Than Nothing”

Pavel Samsonov argues that things that merely sound like something customers might say are worthless. But things that customers actually have said, done, or experienced carry inherent value (although they could be exaggerated). We just need to interpret them correctly.

AI user research isn’t “better than nothing” or “more effective.” It creates an illusion of customer experiences that never happened and are at best good guesses but at worst misleading and non-applicable. Relying on AI-generated “insights” alone isn’t much different than reading tea leaves.

The Cost Of Mechanical Decisions

We often hear about the breakthrough of automation and knowledge generation with AI. Yet we often forget that automation often comes at a cost: the cost of mechanical decisions that are typically indiscriminate, favor uniformity, and erode quality.

As Maria Rosala and Kate Moran write, the problem with AI research is that it most certainly will be misrepresentative, and without real research, you won’t catch and correct those inaccuracies. Making decisions without talking to real customers is dangerous, harmful, and expensive.

Beyond that, synthetic testing assumes that people fit in well-defined boxes, which is rarely true. Human behavior is shaped by our experiences, situations, habits that can’t be replicated by text generation alone. AI strengthens biases, supports hunches, and amplifies stereotypes.

Triangulate Insights Instead Of Verifying Them

Of course, AI can provide useful starting points to explore early in the process. But inherently it also invites false impressions and unverified conclusions, presented with an incredible level of confidence and certainty.

Starting with human research conducted with real customers using a real product is just much more reliable. After doing so, we can still apply AI to see if we perhaps missed something critical in user interviews. AI can enhance but not replace UX research.

Also, when we do use AI for desk research, it can be tempting to try to “validate” AI “insights” with actual user testing. However, once we plant a seed of insight in our head, it’s easy to recognize its signs everywhere — even if it really isn’t there.

Instead, we study actual customers, then triangulate data: track clusters or most heavily trafficked parts of the product. It might be that analytics and AI desk research confirm your hypothesis. That would give you a much stronger standing to move forward in the process.

Wrapping Up

I might sound like a broken record, but I keep wondering why we feel the urgency to replace UX work with automated AI tools. Good design requires a good amount of critical thinking, observation, and planning.

To me personally, cleaning up after AI-generated output takes way more time than doing the actual work. There is incredible value in talking to people who actually use your product.

I would always choose one day with a real customer instead of one hour with 1,000 synthetic users pretending to be humans.

Useful Resources

- Synthetic Users, by Maria Rosala, Kate Moran

- Synthetic Users: The Next Revolution in UX Research?, by Carolina Guimarães

- AI Users Are Neither AI Nor Users, by Debbie Levitt

- Planning Research with Generative AI, by Maria Rosala

- Synthetic Testing, by Stéphanie Walter, Nikki Anderson, MA

- The Dark Side of Synthetic AI Research, by Greg Nudelman

New: How To Measure UX And Design Impact

Meet Measure UX & Design Impact (8h), a new practical guide for designers and UX leads to measure and show your UX impact on business. Use the code 🎟 IMPACT to save 20% off today. Jump to the details.

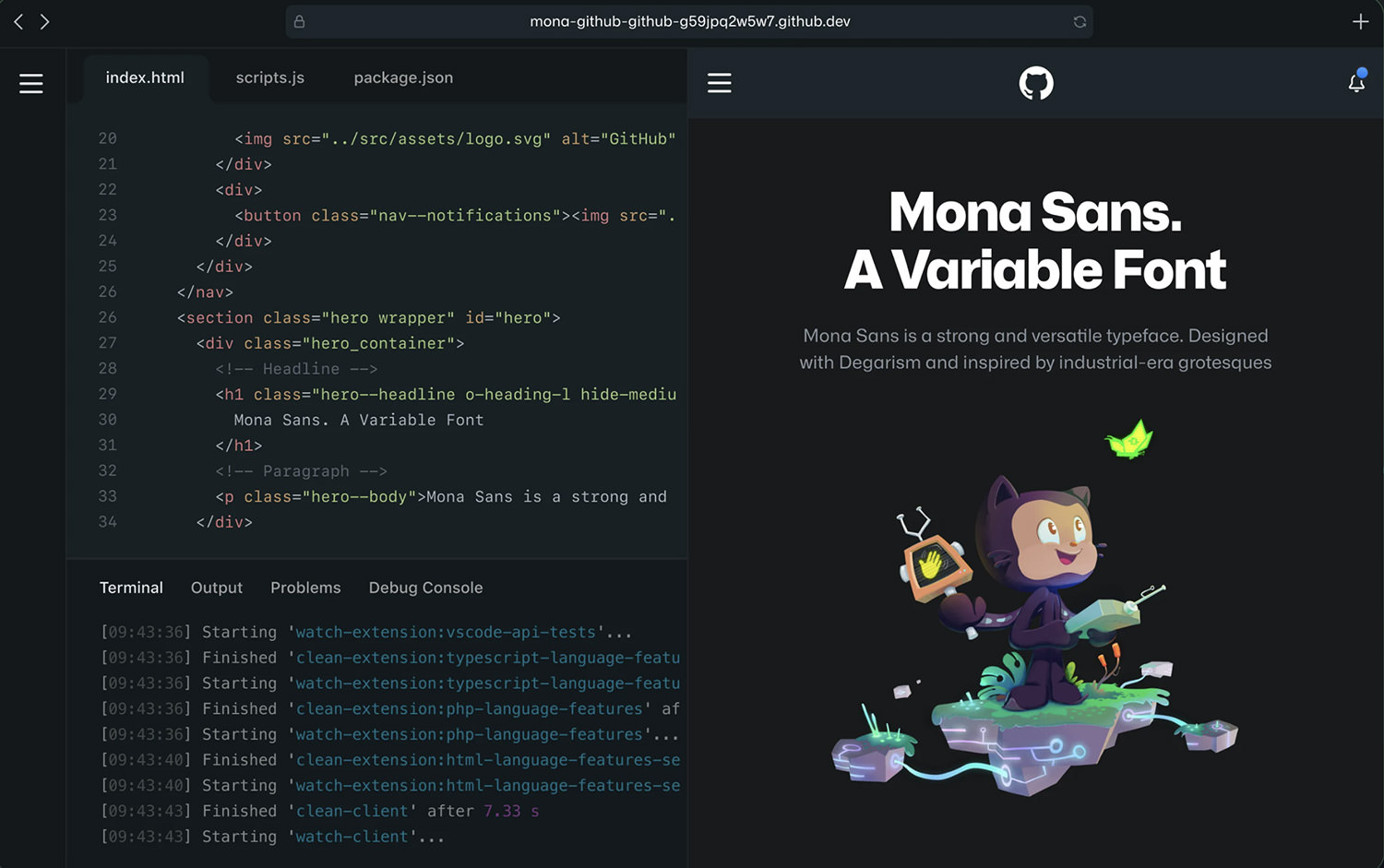

How to Put Your WordPress Site in Maintenance Mode

There are times when you need to temporarily take your WordPress site offline, whether for updates, troubleshooting, or redesigns. Instead of displaying a broken or unfinished site, maintenance mode allows you to show visitors a professional message while you work behind the scenes.

Unlike a regular page, a maintenance page uses the 503 standard HTTP status code, which tells search engines the downtime is temporary and prevents SEO penalties.

In this article, we’ll walk you through a couple of different ways to implement this maintenance page. Let’s check it out.

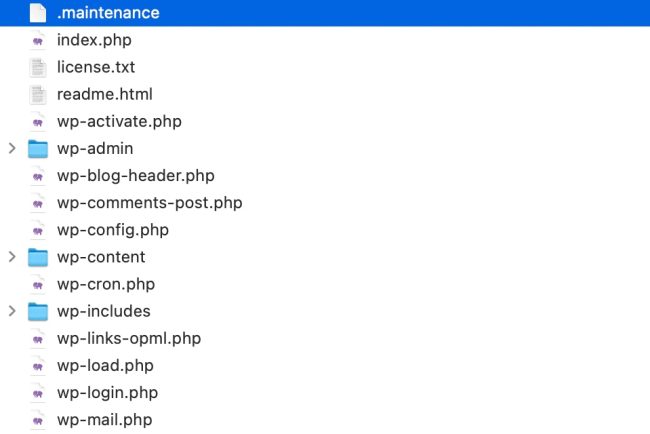

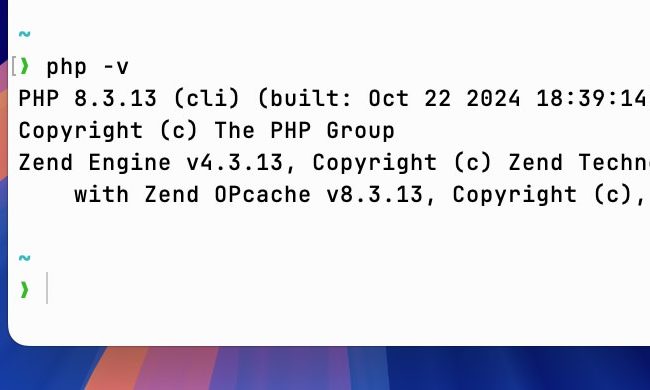

Use Built-in Maintenance File

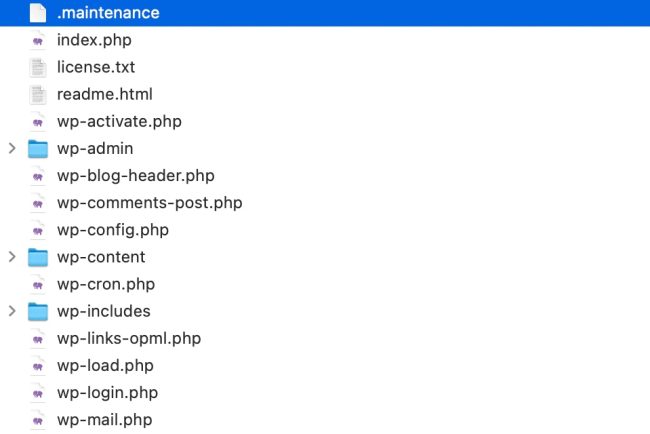

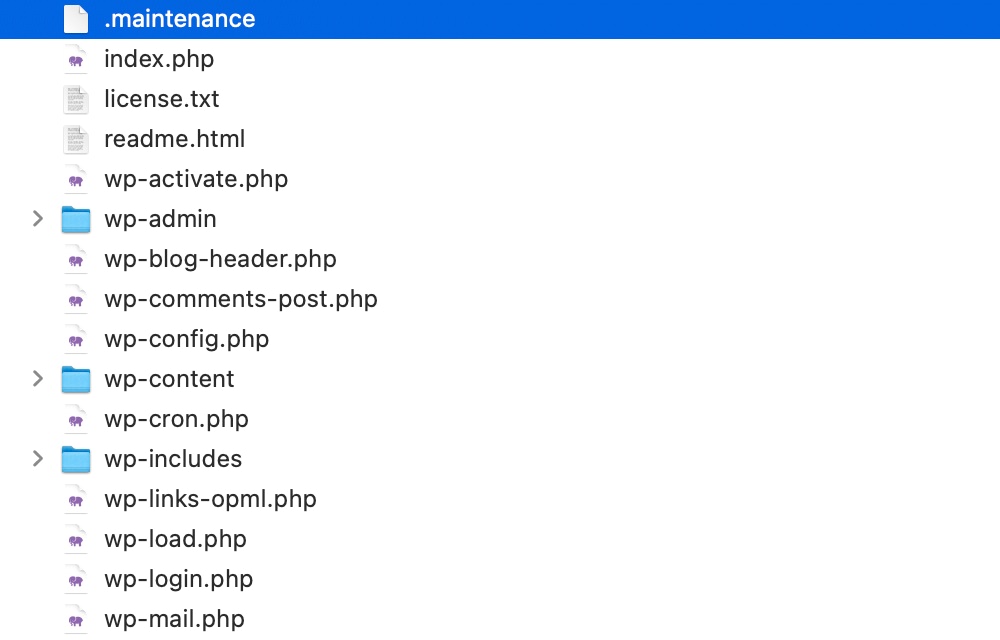

WordPress has a built-in maintenance mode that activates when you update your site. It creates a .maintenance file in the root directory of your site. This file records the time maintenance mode was activated, which WordPress uses to decide whether the site is still in maintenance mode. Likewise, you could create a .maintenance file manually to put your site in maintenance mode.

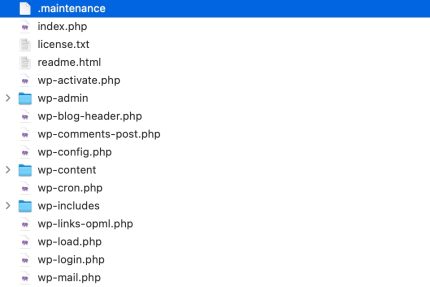

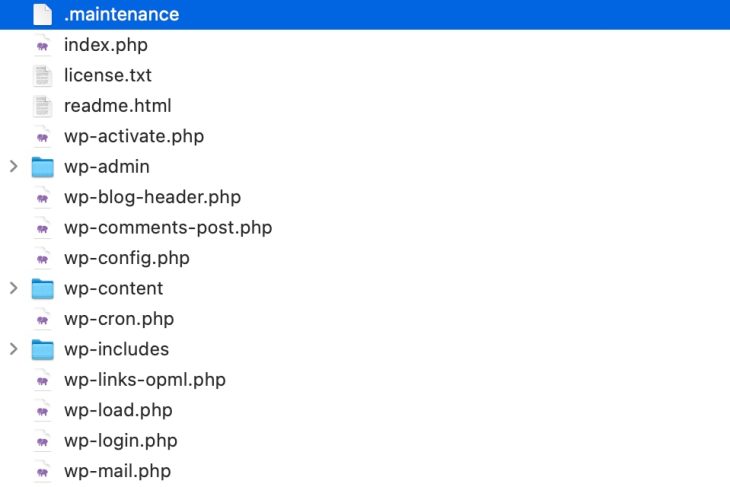

You can create the file, .maintenance, at the root of your WordPress site installation where the wp-config.php file resides, as you can see below:

Then put this code below within the file:

<?php $upgrading = time(); ?>

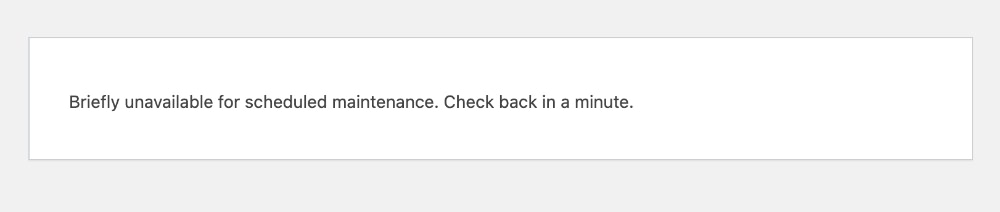

This will immediately activate WordPress maintenance mode. If you call the built-in wp_is_maintenance_mode function, it should return true, confirming that maintenance mode is active. When you reload the page, WordPress will display the default maintenance message.

Customizing the Maintenance Page

The default maintenance message is simple and plain.

You can customize it by creating a custom maintenance page named maintenance.php within the wp-content directory. You can add a custom message and styles to the page to make it more appealing to visitors or make it fit better with your overall site design.

Here is some example code you can put in the file:

<!doctype html>

<html>

<head>

<meta charset="utf-8">

<title>Maintenance</title>

<link rel="stylesheet" href="https://cdnjs.cloudflare.com/ajax/libs/normalize/8.0.1/normalize.min.css">

<style>

#container {

display: flex;

width: 100vw;

height: 100vh;

padding-inline: 20vw;

box-sizing: border-box;

text-align: center;

justify-content: center;

align-items: center;

flex-flow: column wrap;

}

</style>

</head>

<body>

<div id="container">

<h1>Maintenance</h1>

<p>Our website is currently undergoing scheduled maintenance. We should be back shortly. Thank you for your patience.</p>

</div>

</body>

</html>

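Now, when you reload your site, you should see the entire page rendered with the updated content and styles from this maintenance.php file. Keep in mind that WordPress loads this file in place of its default maintenance response, so, as far as we can tell from the wp_maintenance function in WordPress core, the file itself is responsible for sending the 503 status header.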

The problem with this file is that you cannot use WordPress functions such as wp_head, wp_title, wp_footer, esc_html_e, etc. This means that you cannot display the site title, enqueue assets like stylesheets and JavaScript files, or any other dynamic content rendered using WordPress functions within this file.

That’s why, as you can see above, we’ve only added static content and linked stylesheets statically as well.

This leads to additional problems, such as the inability to translate content on the maintenance page. And since stylesheets can’t be dynamically enqueued, the maintenance page design may feel out of place if you change your theme.

Use a Plugin

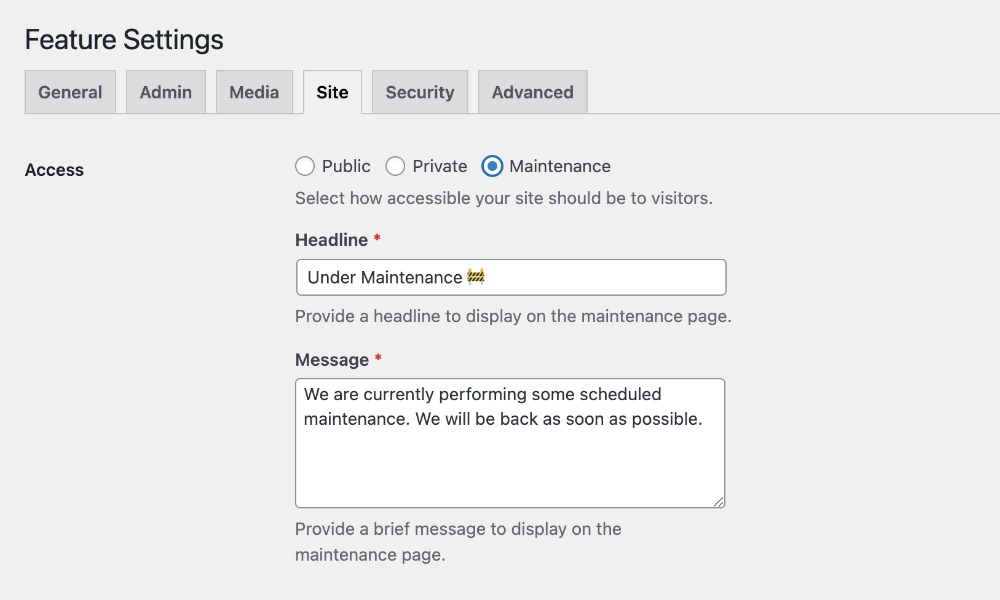

The easiest way to enable maintenance mode on your WordPress site is by using a plugin. There are several options available, but in this article, we’ll use Feature Flipper. This plugin includes various utilities, one of which allows you to activate maintenance mode easily.

After installing and activating the plugin, navigate to Settings > Features > Site and enable the “Maintenance” option. As we can see below, you can also customize the maintenance page content to match your needs.

The maintenance page automatically inherits styles from your active theme to ensure that the page seamlessly matches your theme’s styles. If you change your theme, the maintenance page will adapt to the new styles accordingly.

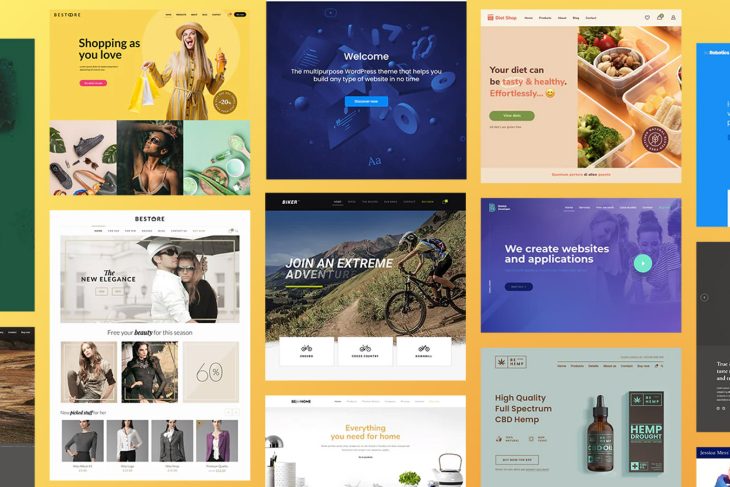

Here’s how it looks with some of the popular themes from the WordPress.org repository:

TwentyTwentyFive

TwentyTwentyFour

TwentyTwentyOne

Wrapping Up

In this article, we explored two ways to enable maintenance mode on your WordPress site. The built-in method is quick and easy but lacks customization. Meanwhile, a plugin offers more flexibility and makes it easy to toggle maintenance mode on and off directly from the dashboard.

No matter which method you choose, don’t forget to disable maintenance mode once you’re done so your site remains accessible!

The post How to Put Your WordPress Site in Maintenance Mode appeared first on Hongkiat.

How To Build Confidence In Your UX Work

Vitaly Friedman 2025-03-11T15:00:00+00:00

2025-03-11T22:04:08+00:00

When I start any UX project, typically, there is very little confidence in the successful outcome of my UX initiatives. In fact, there is quite a lot of reluctance and hesitation, especially from teams that have been burnt by empty promises and poor delivery in the past.

Good UX has a huge impact on business. But often, we need to build up confidence in our upcoming UX projects. For me, an effective way to do that is to address critical bottlenecks and uncover hidden deficiencies — the ones that affect the people I’ll be working with.

Let’s take a closer look at what this can look like.

UX Doesn’t Disrupt, It Solves Problems

Bottlenecks are usually the most disruptive part of any company. Almost every team, every unit, and every department has one. It’s often well-known by employees as they complain about it, but it rarely finds its way to senior management as they are detached from daily operations.

The bottleneck can be the only senior developer on the team, a broken legacy tool, or a confusing flow that throws errors left and right — there’s always a bottleneck, and it’s usually the reason for long waiting times, delayed delivery, and cutting corners in all the wrong places.

We might not be able to fix the bottleneck. But for a smooth flow of work, we need to ensure that non-constraint resources don’t produce more than the constraint can handle. All processes and initiatives must be aligned to support and maximize the efficiency of the constraint.

So before doing any UX work, look out for things that slow down the organization. Show that it’s not UX work that disrupts work, but it’s internal disruptions that UX can help with. And once you’ve delivered even a tiny bit of value, you might be surprised how quickly people will want to see more of what you have in store for them.

The Work Is Never Just “The Work”

Meetings, reviews, experimentation, pitching, deployment, support, updates, fixes — unplanned work blocks other work from being completed. Exposing the root causes of unplanned work and finding critical bottlenecks that slow down delivery is not only the first step we need to take when we want to improve existing workflows, but it is also a good starting point for showing the value of UX.

To learn more about the points that create friction in people’s day-to-day work, set up 1:1s with the team and ask them what slows them down. Find a problem that affects everyone. Perhaps too much work in progress results in late delivery and low quality? Or do lengthy meetings steal precious time?

One frequently overlooked detail is that we can’t manage work that is invisible. That’s why it is so important that we visualize the work first. Once we know the bottleneck, we can suggest ways to improve it. It could be to introduce 20% idle times if the workload is too high, for example, or to make meetings slightly shorter to make room for other work.

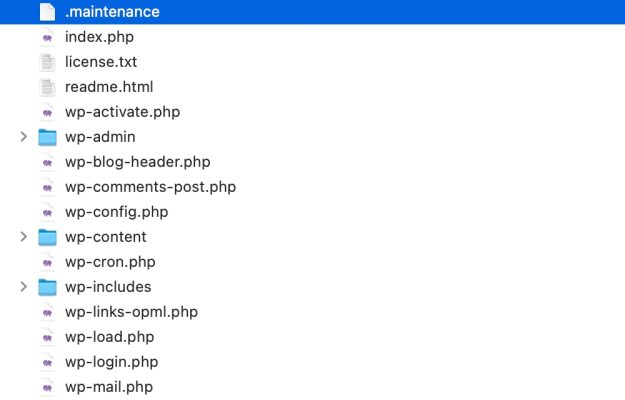

The Theory Of Constraints

The idea that the work is never just “the work” is deeply connected to the Theory of Constraints developed by Dr. Eliyahu M. Goldratt. It showed that any improvements made anywhere besides the bottleneck are an illusion.

Any improvement after the bottleneck is useless because that stage will always remain starved, waiting for work from the bottleneck. And any improvements made before the bottleneck result in more work piling up at the bottleneck.
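To make this more tangible, here is a minimal Python sketch that models a workflow as a chain of stages, each with a weekly capacity. The stage names, the numbers, and the helper functions (`throughput`, `weekly_pileup`, `with_capacity`) are all made up for illustration and do not come from Goldratt’s work. The sketch assumes that the chain can’t deliver more than its slowest stage and that every stage before the bottleneck works at full capacity.

```python
def throughput(stages):
    """Items per week the whole chain delivers: capped by its slowest stage."""
    return min(capacity for _, capacity in stages)


def weekly_pileup(stages, bottleneck):
    """Work reaching the bottleneck each week minus what it can process."""
    names = [name for name, _ in stages]
    index = names.index(bottleneck)
    own_capacity = stages[index][1]
    upstream = [capacity for _, capacity in stages[:index]]
    arriving = min(upstream) if upstream else own_capacity
    return max(0, arriving - own_capacity)


def with_capacity(stages, name, capacity):
    """Return a copy of the chain with one stage's capacity changed."""
    return [(n, capacity if n == name else c) for n, c in stages]


# Hypothetical weekly capacities, in work order; "review" is the bottleneck.
stages = [("design", 12), ("development", 10), ("review", 4), ("deployment", 15)]

scenarios = {
    "baseline": stages,
    "faster stage after the bottleneck": with_capacity(stages, "deployment", 30),
    "faster stage before the bottleneck": with_capacity(stages, "development", 12),
    "faster bottleneck": with_capacity(stages, "review", 8),
}

for label, chain in scenarios.items():
    print(f"{label}: throughput={throughput(chain)}, "
          f"pile-up at review={weekly_pileup(chain, 'review')}")
```

In this toy model, only the last scenario changes how much the team delivers. Speeding up deployment changes nothing, and speeding up development only makes the queue in front of review grow faster.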

Wait Time = Busy ÷ Idle

To improve flow, sometimes we need to freeze the work and bring focus to one single project. Just as important as throttling the release of work is managing the handoffs. The wait time for a given resource is the percentage of time that the resource is busy divided by the percentage of time it’s idle. If a resource is 50% utilized, the wait time is 50/50, or 1 unit.

If the resource is 90% utilized, the wait time is 90/10, or 9 times longer. And if it’s utilized 99% of the time, it’s 99/1, so 99 times longer than if that resource is 50% utilized. The critical part is to make wait times visible so you know when your work spends days sitting in someone’s queue.

The exact times don’t matter, but if a resource is busy 99% of the time, the wait time will explode.
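The arithmetic above can be sketched in a few lines of Python. The function name `wait_time` and the choice to express utilization as a whole-number percentage are assumptions made for this illustration; the formula itself is the busy-divided-by-idle ratio described above.

```python
def wait_time(busy_percent):
    """Relative wait time: percentage of time busy divided by percentage idle."""
    if not 0 <= busy_percent < 100:
        raise ValueError("busy_percent must be at least 0 and below 100")
    idle_percent = 100 - busy_percent
    return busy_percent / idle_percent


# Compare the utilization levels mentioned in the text.
for busy in (50, 90, 99):
    print(f"{busy}% busy -> wait time of {wait_time(busy):.0f} unit(s)")
```

A resource that is busy 100% of the time has no idle time at all, so the ratio is undefined. The sketch rejects that input rather than dividing by zero.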

Avoid 100% Occupation

Our goal is to maximize flow: that means exploiting the constraint but creating idle times for non-constraint resources to optimize system performance.

One surprising finding for me was that any attempt to maximize the utilization of all resources — 100% occupation across all departments — can actually be counterproductive. As Goldratt noted, “An hour lost at a bottleneck is an hour out of the entire system. An hour saved at a non-bottleneck is worthless.”

Recommended Read: “The Phoenix Project”

I can only wholeheartedly recommend The Phoenix Project, an absolutely incredible book that goes into all the fine details of the Theory of Constraints described above.

It’s not a design book but a great book for designers who want to be more strategic about their work. It’s a delightful and very real read about the struggles of shipping (albeit on a more technical side).

Wrapping Up

People don’t like sudden changes and uncertainty, and UX work often disrupts their usual ways of working. Unsurprisingly, most people tend to block it by default. So before we introduce big changes, we need to get their support for our UX initiatives.

We need to build confidence and show them the value that UX work can have for their day-to-day work. To achieve that, we can work together with them, listening to the pain points they encounter in their workflows and to the things that slow them down.

Once we’ve uncovered internal disruptions, we can tackle these critical bottlenecks and suggest steps to make existing workflows more efficient. That’s the foundation for gaining their trust and showing them that UX work doesn’t disrupt but is here to solve problems.


The Human Element: Using Research And Psychology To Elevate Data Storytelling

Victor Yocco & Angelica Lo Duca 2025-02-26T10:00:00+00:00

2025-03-04T21:34:45+00:00

Data storytelling is a powerful communication tool that combines data analysis with narrative techniques to create impactful stories. It goes beyond presenting raw numbers by transforming complex data into meaningful insights that can drive decisions, influence behavior, and spark action.

When done right, data storytelling simplifies complex information, engages the audience, and compels them to act. It allows UX professionals to communicate the “why” behind their design choices, advocate for user-centered improvements, and create more impactful and persuasive presentations. This translates to stronger buy-in for research initiatives, increased alignment across teams, and, ultimately, products and experiences that truly meet user needs.

For instance, The New York Times’ Snow Fall data story (Figure 1) used data to immerse readers in the tale of a deadly avalanche through interactive visuals and text, while The Guardian’s The Counted (Figure 2) powerfully illustrated police violence in the U.S. by humanizing data through storytelling. These examples show that effective data storytelling can leave lasting impressions, prompting readers to think differently, act, or make informed decisions.

The importance of data storytelling lies in its ability to:

- Simplify complexity

  It makes data understandable and actionable.

- Engage and persuade

  Emotional and cognitive engagement ensures audiences not only understand but also feel compelled to act.

- Bridge gaps

  Data storytelling connects the dots between information and human experience, making the data relevant and relatable.

While there are numerous models of data storytelling, here are a few high-level areas of focus UX practitioners should have a grasp on:

Narrative Structures: Traditional storytelling models like the hero’s journey (Vogler, 1992) or the Freytag pyramid (Figure 3) provide a backbone for structuring data stories. These models help create a beginning, rising action, climax, falling action, and resolution, keeping the audience engaged.

Data Visualization: Broadly speaking, these are the tools and techniques for visualizing data in our stories. Interactive charts, maps, and infographics (Cairo, 2016) transform raw data into digestible visuals, making complex information easier to understand and remember.

Narrative Structures For Data

Moving beyond these basic structures, let’s explore how more sophisticated narrative techniques can enhance the impact of data stories:

- The Three-Act Structure

  This approach divides the data story into setup, confrontation, and resolution. It helps build context, present the problem or insight, and offer a solution or conclusion (Few, 2005).

- The Hero’s Journey (Data Edition)

  We can frame a data set as a problem that needs a hero to overcome. In this case, the hero is often the audience or the decision-maker who needs to use the data to solve a problem. The data itself becomes the journey, revealing challenges, insights, and, ultimately, a path to resolution.

  Example: Presenting data on declining user engagement could follow the hero’s journey. The “call to adventure” is the declining engagement. The “challenges” are revealed through data points showing where users are dropping off. The “insights” are uncovered through further analysis, revealing the root causes. The “resolution” is the proposed solution, supported by data, that the audience (the hero) can implement.

Problems With Widely Used Data Storytelling Models

Many data storytelling models follow a traditional, linear structure: data selection, audience tailoring, storyboarding with visuals, and a call to action. While these models aim to make data more accessible, they often fail to engage the audience on a deeper level, leading to missed opportunities. This happens because they prioritize the presentation of data over the experience of the audience, neglecting how different individuals perceive and process information.

While existing data storytelling models adhere to a structured and technically correct approach to data creation, they often fall short of fully analyzing and understanding their audience. This gap weakens their overall effectiveness and impact.

- Cognitive Overload

  Presenting too much data without context or a clear narrative overwhelms the audience. Instead of enlightenment, they experience confusion and disengagement. It’s like trying to drink from a firehose; the sheer volume becomes counterproductive. This overload can be particularly challenging for individuals with cognitive differences who may require information to be presented in smaller, more digestible chunks.

- Emotional Disconnect

  Data-heavy presentations often fail to establish an emotional connection, which is crucial for driving audience engagement and action. People are more likely to remember and act upon information that resonates with their feelings and values.

- Lack of Personalization

  Many data stories adopt a one-size-fits-all approach. Without tailoring the narrative to specific audience segments, the impact is diluted. A message that resonates with a CEO might not land with frontline employees.

- Over-Reliance on Visuals

  While visuals are essential for simplifying data, they are insufficient without a cohesive narrative to provide context and meaning, and they may not be accessible to all audience members.

These shortcomings reveal a critical flaw: while current models successfully follow a structured data creation process, they often neglect the deeper, audience-centered analysis required for actual storytelling effectiveness. To bridge this gap, data storytelling must evolve beyond simply presenting information and prioritize audience understanding, engagement, and accessibility at every stage.

Improving On Traditional Models

Traditional models can be improved by focusing more on the following two critical components:

Audience understanding: A greater focus can be concentrated on who the audience is, what they need, and how they perceive information. Traditional models should consider the unique characteristics and needs of specific audiences. This lack of audience understanding can lead to data stories that are irrelevant, confusing, or even misleading.

Effective data storytelling requires a deep understanding of the audience’s demographics, psychographics, and information needs. This includes understanding their level of knowledge about the topic, their prior beliefs and attitudes, and their motivations for seeking information. By tailoring the data story to a specific audience, storytellers can increase engagement, comprehension, and persuasion.

Psychological principles: These models could be improved with insights from psychology that explain how people process information and make decisions. Without these elements, even the most beautifully designed data story may fall flat.

By incorporating audience understanding and psychological principles into their storytelling process, data storytellers can create more effective and engaging narratives that resonate with their audience and drive desired outcomes.

Persuasion In Data Storytelling

All storytelling involves persuasion. Even if it’s a poorly told story and your audience chooses to ignore your message, you’ve persuaded them to do that. When your audience feels that you understand them, they are more likely to be persuaded by your message. Data-driven stories that speak to their hearts and minds are more likely to drive action. You can frame your message effectively when you have a deeper understanding of your audience.

Applying Psychological Principles To Data Storytelling

Humans process information based on psychological cues such as cognitive ease, social proof, and emotional appeal. By incorporating these principles, data storytellers can make their narratives more engaging, memorable, and persuasive.

Psychological principles help data storytellers tap into how people perceive, interpret, and remember information.

The Theory of Planned Behavior

While there is no single truth when it comes to how human behavior is created or changed, it is important for a data storyteller to use a theoretical framework to ensure they address the appropriate psychological factors of their audience. The Theory of Planned Behavior (TPB) is a commonly cited theory of behavior change in academic psychology research and courses. It’s useful for creating a reasonably effective framework to collect audience data and build a data story around it.

The TPB (Ajzen, 1991) (Figure 5) aims to predict and explain human behavior. It consists of three key components:

- Attitude

  This refers to the degree to which a person has a favorable or unfavorable evaluation of the behavior in question. An example of attitudes in the TPB is a person’s belief about the importance of regular exercise for good health. If an individual strongly believes that exercise is beneficial, they are likely to have a favorable attitude toward engaging in regular physical activity.

- Subjective Norms

  These are the perceived social pressures to perform or not perform the behavior. Keeping with the exercise example, this would be how a person thinks their family, peers, community, social media, and others perceive the importance of regular exercise for good health.

- Perceived Behavioral Control

  This component reflects the perceived ease or difficulty of performing the behavior. For our physical activity example, does the individual believe they have access to exercise in terms of time, equipment, physical capability, and other potential aspects that make them feel more or less capable of engaging in the behavior?

As shown in Figure 5, these three components interact to create behavioral intentions, which are a proxy for actual behaviors that we often don’t have the resources to measure in real-time with research participants (Ajzen, 1991).

UX researchers and data storytellers should develop a working knowledge of the TPB or another suitable psychological theory before moving on to measure the audience’s attitudes, norms, and perceived behavioral control. We have included additional resources to support your learning about the TPB in the references section of this article.

How To Understand Your Audience And Apply Psychological Principles

OK, we’ve covered the importance of audience understanding and psychology. These two principles serve as the foundation of the proposed model of storytelling we’re putting forth. Let’s explore how to integrate them into your storytelling process.

Introducing The Audience Research Informed Data Storytelling Model (ARIDSM)

At the core of successful data storytelling lies a deep understanding of your audience’s psychology. Here’s a five-step process to integrate UX research and psychological principles effectively into your data stories:

Step 1: Define Clear Objectives

Before diving into data, it’s crucial to establish precisely what you aim to achieve with your story. Do you want to inform, persuade, or inspire action? What specific message do you want your audience to take away?

Why it matters: Defining clear objectives provides a roadmap for your storytelling journey. It ensures that your data, narrative, and visuals are all aligned toward a common goal. Without this clarity, your story risks becoming unfocused and losing its impact.

How to execute Step 1: Start by asking yourself:

- What is the core message I want to convey?

- What do I want my audience to think, feel, or do after experiencing this story?

- How will I measure the success of my data story?

Frame your objectives using action verbs and quantifiable outcomes. For example, instead of “raise awareness about climate change,” aim to “persuade 20% of the audience to adopt one sustainable practice.”

Example:

Imagine you’re creating a data story about employee burnout. Your objective might be to convince management to implement new policies that promote work-life balance, with the goal of reducing reported burnout cases by 15% within six months.

Step 2: Conduct UX Research To Understand Your Audience

This step involves gathering insights about your audience: their demographics, needs, motivations, pain points, and how they prefer to consume information.

Why it matters: Understanding your audience is fundamental to crafting a story that resonates. By knowing their preferences and potential biases, you can tailor your narrative and data presentation to capture their attention and ensure the message is clearly understood.

How to execute Step 2: Employ UX research methods like surveys, interviews, persona development, and testing the message with potential audience members.

Example:

If your data story aims to encourage healthy eating habits among college students, you might survey students to learn what attitudes they hold towards specific types of healthy foods, then apply that knowledge in your data story.

Step 3: Analyze and Select Relevant Audience Data

This step bridges the gap between raw data and meaningful insights. It involves exploring your data to identify patterns, trends, and key takeaways that support your objectives and resonate with your audience.

Why it matters: Careful data analysis ensures that your story is grounded in evidence and that you’re using the most impactful data points to support your narrative. This step adds credibility and weight to your story, making it more convincing and persuasive.

How to execute Step 3:

- Clean and organize your data.

Ensure accuracy and consistency before analysis.

- Identify key variables and metrics.

This will be determined by the psychological principle you used to inform your research. Using the TPB, we might look closely at how we measured social norms to understand directionally how the audience perceives social norms around the topic of the data story you are sharing, allowing you to frame your call to action in ways that resonate with these norms. You might run a variety of statistics at this point, including factor analysis to create groups based on similar traits, t-tests to determine if averages on your measurements are significantly different between groups, and correlations to see if there might be an assumed direction between scores on various items.
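To make the statistics mentioned above a little more concrete, here is a minimal sketch of two of them using only the Python standard library. The scores, the group split, and the helper names `welch_t` and `pearson_r` are hypothetical and chosen purely for illustration. The t statistic shown is Welch's variant, which is one common choice and not necessarily the test used in the study described in this article.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(a, b):
    """Welch's t statistic for two independent samples (unequal variances allowed)."""
    return (mean(a) - mean(b)) / sqrt(variance(a) / len(a) + variance(b) / len(b))

def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long score lists."""
    mx, my = mean(x), mean(y)
    cov = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    spread = sqrt(sum((xi - mx) ** 2 for xi in x) * sum((yi - my) ** 2 for yi in y))
    return cov / spread

# Hypothetical mean attitude scores (1 to 5) for two audience groups.
group_1_attitude = [4.3, 4.0, 4.7, 3.7, 4.3]
group_2_attitude = [2.3, 3.0, 2.7, 2.0, 3.3]

# Hypothetical paired scores: attitude vs. stated intention to act.
attitude = [4.3, 4.0, 4.7, 3.7, 2.3, 3.0]
intention = [4, 4, 5, 3, 2, 3]

t_stat = welch_t(group_1_attitude, group_2_attitude)
r = pearson_r(attitude, intention)
print(f"Welch t statistic: {t_stat:.2f}")
print(f"Pearson r: {r:.2f}")
```

A t statistic on its own is not a significance test. To get a p-value you would compare it against a t distribution, which is usually easiest with a dedicated statistics package or spreadsheet function.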

Example:

If your objective is to demonstrate the effectiveness of a new teaching method, you might analyze how open your audience perceives their peers to be to adopting new methods, how strongly they believe they are in control of the decision to use a new teaching method, and their attitude towards the effectiveness of their current teaching methods. You can then create groups with various levels of receptivity to trying new methods, allowing you to later tailor your data story for each group.

Step 4: Apply The Theory of Planned Behavior Or Your Psychological Principle Of Choice [Done Simultaneously With Step 3]

In this step, you will see that The Theory of Planned Behavior (TPB) provides a robust framework for understanding the factors that drive human behavior. It posits that our intentions, which are the strongest predictors of our actions, are shaped by three core components: attitudes, subjective norms, and perceived behavioral control. By consciously incorporating these elements into your data story, you can significantly enhance its persuasive power.

Why it matters: The TPB offers valuable insights into how people make decisions. By aligning your narrative with these psychological drivers, you increase the likelihood of influencing your audience’s intentions and, ultimately, their behavior. This step adds a layer of strategic persuasion to your data storytelling, making it more impactful and effective.

How to execute Step 4:

Here’s how to leverage the TPB in your data story:

Influence Attitudes: Present data and evidence that highlight the positive consequences of adopting the desired behavior. Frame the behavior as beneficial, valuable, and aligned with the audience’s values and aspirations.

This is where having a deep knowledge of the audience is helpful. Let’s imagine you are creating a data story on exercise and your call to action promotes daily exercise. If you know your audience has a highly positive attitude towards exercise, you can capitalize on that and frame your language around the benefits of exercising, increasing exercise, or specific exercises that might be best suited for the audience. It’s about framing exercise not just as a physical benefit but as a holistic improvement to their life. You can also tie it to their identity, positioning exercise as an integral part of living the kind of life they aspire to.

Shape Subjective Norms: Demonstrate that the desired behavior is widely accepted and practiced by others, especially those the audience admires or identifies with. Knowing ahead of time if your audience thinks daily exercise is something their peers approve of or engage in will allow you to shape your messaging accordingly. Highlight testimonials, success stories, or case studies from individuals who mirror the audience’s values.

If you were to find that the audience does not consider exercise to be normative amongst peers, you would look for examples of similar groups of people who do exercise. For example, if your audience is in a certain age group, you might focus on what data you have that supports a large percentage of those in their age group engaging in exercise.

Enhance Perceived Behavioral Control: Address any perceived barriers to adopting the desired behavior and provide practical solutions. For instance, when promoting daily exercise, it’s important to acknowledge the common obstacles people face — lack of time, resources, or physical capability — and demonstrate how these can be overcome.

Step 5: Craft A Balanced And Persuasive Narrative

This is where you synthesize your data, audience insights, psychological principles (including the TPB), and storytelling techniques into a compelling and persuasive narrative. It’s about weaving together the logical and emotional elements of your story to create an experience that resonates with your audience and motivates them to act.

Why it matters: A well-crafted narrative transforms data from dry statistics into a meaningful and memorable experience. It ensures that your audience not only understands the information but also feels connected to it on an emotional level, increasing the likelihood of them internalizing the message and acting upon it.

How to execute Step 5:

Structure your story strategically: Use a clear narrative arc that guides your audience through the information. Begin by establishing the context and introducing the problem, then present your data-driven insights in a way that supports your objectives and addresses the TPB components. Conclude with a compelling call to action that aligns with the attitudes, norms, and perceived control you’ve cultivated throughout the narrative.

Example:

In a data story about promoting exercise, you could:

- Determine what stories might be available using the data you have collected or obtained. In this example, let’s say you work for a city planning office and have data suggesting people aren’t currently biking as frequently as they could, even if they are bike owners.

- Begin with a relatable story about lack of exercise and its impact on people’s lives. Then, present data on the benefits of cycling, highlighting its positive impact on health, socializing, and personal feelings of well-being (attitudes).

- Integrate TPB elements: Showcase stories of people who have successfully incorporated cycling into their daily commute (subjective norms). Provide practical tips on bike safety, route planning, and finding affordable bikes (perceived behavioral control).

- Use infographics to compare commute times and costs between driving and cycling. Show maps of bike-friendly routes and visually appealing images of people enjoying cycling.

- Call to action: Encourage the audience to try cycling for a week and provide links to resources like bike share programs, cycling maps, and local cycling communities.

Evaluating The Method

Our next step is to test our hypothesis that incorporating audience research and psychology into creating a data story will lead to more powerful results. We have conducted preliminary research using messages focused on climate change, and our results suggest some support for our assertion.

We purposely chose a controversial topic because we believe data storytelling can be a powerful tool. If we want to truly realize the benefits of effective data storytelling, we need to focus on topics that matter. We also know that academic research suggests it is more difficult to shift opinions or generate behavior around topics that are polarizing (at least in the US), such as climate change.

We are not ready to share the full results of our study. We will share those in an academic journal and in conference proceedings. Here is a look at how we set up the study and how you might do something similar when either creating a data story using our method or doing your own research to test our model. You will see that it closely aligns with the model itself, with the added steps of testing the message against a control message and taking measurements of the actions the message(s) are likely to generate.

Step 1: We chose our topic and the data set we wanted to explore. As I mentioned, we purposely went with a polarizing topic. My academic background was in messaging around conservation issues, so we explored that. We used data from a publicly available data set that states July 2023 was the hottest month ever recorded.

Step 2: We identified our audience and took basic measurements. We decided our audience would be members of the general public who do not work directly with climate data or in other fields relevant to climate change science.

We wanted a diverse range of ages and backgrounds, so we added screening questions for these to the survey we used to measure the TPB components. We created this survey to measure the elements of the TPB as they relate to climate change and administered it via a Google Forms link that we shared directly, in social media posts, and on online message boards related to climate change and survey research.

Step 3: We analyzed our data and broke our audience into groups based on key differences. This part required a bit of statistical know-how. Essentially, we entered all of the responses into a spreadsheet and ran a factor analysis to define groups based on shared attributes. In our case, we found two distinct groups for our respondents. We then looked deeper into the individual differences between the groups, e.g., group 1 had a notably higher level of positive attitude towards taking action to remediate climate change.

Step 4 [remember this happens simultaneously with step 3]: We incorporated aspects of the TPB in how we framed our data analysis. As we created our groups and looked at the responses to the survey, we made sure to note how this might impact the story for our various groups. Using our previous example, a group with a higher positive attitude toward taking action might need less convincing to do something about climate change and more information on what exactly they can do.

Table 1 contains examples of the questions we asked related to the TPB. We used the guidance provided here to generate the survey items to measure the TPB related to climate change activism. Note that even the academic who created the TPB states there are no standardized questions (PDF) validated to measure the concepts for each individual topic.

| Item | Measures | Scale |
|---|---|---|
| How beneficial do you believe individual actions are compared to systemic changes (e.g., government policies) in tackling climate change? | Attitude | 1 to 5 with 1 being “not beneficial” and 5 being “extremely beneficial” |
| How much do you think the people you care about (family, friends, community) expect you to take action against climate change? | Subjective Norms | 1 to 5 with 1 being “they do not expect me to take action” and 5 being “they expect me to take action” |
| How confident are you in your ability to overcome personal barriers when trying to reduce your environmental impact? | Perceived Behavioral Control | 1 to 5 with 1 being “not at all confident” and 5 being “extremely confident” |

Table 1: Examples of questions we used to measure the TPB factors. We asked multiple questions for each factor and then generated a combined mean score for each component.
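The combined mean score described in the Table 1 caption could be computed along these lines. This is only a sketch: the response values, the number of items per component, and the dictionary keys are invented for illustration and are not data from the study.

```python
from statistics import mean

# Hypothetical survey responses: each respondent rates several 1-to-5 items
# per TPB component. Keys and values are made up for this example.
responses = [
    {"attitude": [4, 5, 4], "subjective_norms": [2, 3, 2], "perceived_control": [3, 3, 4]},
    {"attitude": [2, 1, 2], "subjective_norms": [4, 4, 5], "perceived_control": [2, 2, 1]},
]

def component_scores(respondent):
    """Return the mean of the item ratings for each TPB component."""
    return {component: mean(items) for component, items in respondent.items()}

scores = [component_scores(r) for r in responses]
for number, respondent_scores in enumerate(scores, start=1):
    rounded = {name: round(value, 2) for name, value in respondent_scores.items()}
    print(f"Respondent {number}: {rounded}")
```

Each respondent ends up with one score per component, which is the form you need before comparing groups or running further analysis.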

Step 5: We created data stories aligned with the groups and a control story. We created multiple stories to align with the groups we identified in our audience. We also created a control message that lacked substantial framing in any direction. See below for an example of the control data story (Figure 7) and one of the customized data stories (Figure 8) we created.

Step 6: We released the stories and took measurements of the likelihood of acting. Specific to our study, we asked the participants how likely they were to “Click here to LEARN MORE.” Our hypothesis was that individuals would express a notably higher likelihood to want to click to learn more on the data story aligned with their grouping, as compared to the competing group and the control group.

Step 7: We analyzed the differences between the preexisting groups and what they stated was their likelihood of acting. As I mentioned, our findings are still preliminary, and we are looking at ways to increase our response rate so we can present statistically substantiated findings. Our initial findings are that we do see small differences between the responses to the tailored data stories and the control data story. This is directionally what we would expect to see. If you are going to conduct a similar study or test out your messages, you would also be looking for results that suggest your ARIDSM-derived message is more likely to generate the expected outcome than a control message or a non-tailored message.
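If you run a similar comparison yourself, one simple way to summarize the difference between a tailored story and a control story is a mean difference plus a standardized effect size. The sketch below uses Cohen's d with a pooled standard deviation, which is our choice for illustration and not a measure reported by the study. The likelihood ratings and the function name `cohens_d` are hypothetical.

```python
from math import sqrt
from statistics import mean, variance

def cohens_d(treatment, control):
    """Standardized mean difference using the pooled sample variance."""
    n1, n2 = len(treatment), len(control)
    pooled_var = ((n1 - 1) * variance(treatment) + (n2 - 1) * variance(control)) / (n1 + n2 - 2)
    return (mean(treatment) - mean(control)) / sqrt(pooled_var)

# Hypothetical 1-to-5 ratings of "how likely are you to click to learn more".
tailored_story = [4, 3, 5, 4, 3, 4]
control_story = [3, 3, 4, 2, 3, 4]

difference = mean(tailored_story) - mean(control_story)
effect = cohens_d(tailored_story, control_story)
print(f"Mean difference: {difference:.2f}")
print(f"Cohen's d: {effect:.2f}")
```

With small samples like these, an effect size only describes the direction and rough magnitude of a difference. It does not show that the difference is statistically significant.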

Overall, we feel there is an exciting possibility and that future research will help us refine exactly what is critical about generating a message that will have a positive impact on your audience. We also expect there are better models of psychology to use to frame your measurements and message depending on the audience and topic.

For example, you might feel Maslow’s hierarchy of needs is more relevant to your data storytelling. You would want to take measurements related to these needs from your audience and then frame the data story using how a decision might help meet their needs.

Elevate Your Data Storytelling

Traditional models of data storytelling, while valuable, often fall short of effectively engaging and persuading audiences. This is primarily due to their neglect of crucial aspects such as audience understanding and the application of psychological principles. By incorporating these elements into the data storytelling process, we can create more impactful and persuasive narratives.

The five-step framework proposed in this article — defining clear objectives, conducting UX research, analyzing data, applying psychological principles, and crafting a balanced narrative — provides a roadmap for creating data stories that resonate with audiences on both a cognitive and emotional level. This approach ensures that data is not merely presented but is transformed into a meaningful experience that drives action and fosters change. As data storytellers, embracing this human-centric approach allows us to unlock the full potential of data and create narratives that truly inspire and inform.

Effective data storytelling isn’t a black box. You can test your data stories for effectiveness using the same research process we are using to test our hypothesis. This takes additional time, but you will make it back in the form of a stronger impact on your audience, provided your data story is shown to have a significantly greater impact than a control message or other messages you were considering that don’t incorporate the psychological traits of your audience.

Please feel free to use our method and provide any feedback on your experience to the author.

(yk)

How To Test And Measure Content In UX


Vitaly Friedman 2025-02-13T08:00:00+00:00

2025-03-04T21:34:45+00:00

Content testing is a simple way to test the clarity and understanding of the content on a page — be it a paragraph of text, a user flow, a dashboard, or anything in between. Our goal is to understand how well users actually perceive the content that we present to them.

It’s not only about finding pain points and things that cause confusion or hinder users from finding the right answer on a page but also about whether our content clearly and precisely articulates what we actually want to communicate.


This article is part of our ongoing series on UX. You can find more details on design patterns and UX strategy in Smart Interface Design Patterns 🍣 — with live UX training coming up soon. Free preview.

Banana Testing

A great way to test how well your design matches a user’s mental model is Banana Testing. We replace all key actions with the word “Banana,” then ask users to suggest what each action could prompt.

Not only does it tell you if key actions are understood immediately and if they are in the right place, but it also shows whether your icons are helpful and whether interactive elements such as links or buttons are perceived as such.

Content Heatmapping

One reliable technique to assess content is content heatmapping. The way we would use it is by giving participants a task, then asking them to highlight things that are clear or confusing. We could define any other dimensions or style lenses as well: e.g., phrases that bring more confidence and less confidence.

Then we map all highlights into a heatmap to identify patterns and trends. You could run it with print-outs in person, but it could also happen in Figjam or in Miro remotely — as long as your tool of choice has a highlighter feature.
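Once highlights are collected, tallying them is straightforward. The following sketch counts how many participants marked each phrase under each label. The participants, phrases, labels, and the `heatmap` helper are hypothetical, and in practice you would export the highlights from your tool of choice instead of typing them in.

```python
from collections import Counter

# Hypothetical highlights: (participant, highlighted phrase, label).
highlights = [
    ("P1", "Start free trial", "clear"),
    ("P1", "Billing cycle resets monthly", "confusing"),
    ("P2", "Billing cycle resets monthly", "confusing"),
    ("P2", "Start free trial", "clear"),
    ("P3", "Billing cycle resets monthly", "clear"),
]

def heatmap(highlights):
    """Count how often each phrase was highlighted under each label."""
    counts = {}
    for _participant, phrase, label in highlights:
        counts.setdefault(phrase, Counter())[label] += 1
    return counts

heat = heatmap(highlights)
for phrase, labels in heat.items():
    print(f"{phrase}: {dict(labels)}")
```

Phrases with a high "confusing" count, or with a mix of "clear" and "confusing" marks, are good candidates for follow-up questions in a moderated session.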

Run Moderated Testing Sessions

These little techniques above help you discover content issues, but they don’t tell you what is missing in the content and what doubts, concerns, and issues users have with it. For that, we need to uncover user needs in more detail.

Too often, users say that a page is “clear and well-organized,” but when you ask them specific questions, you notice that their understanding is vastly different from what you were trying to bring into the spotlight.

Such insights rarely surface in unmoderated sessions — it’s much more effective to observe behavior and ask questions on the spot, be it in person or remote.

Test Concepts, Not Words

Before testing, we need to know what we want to learn. First, write up a plan with goals, customers, questions, script. Don’t tweak words alone — broader is better. In the session, avoid speaking aloud as it’s usually not how people consume content. Ask questions and wait silently.

After the task is completed, ask users to explain the product, flow, and concepts to you. But: don’t ask them what they like, prefer, feel, or think. And whenever possible, avoid the word “content” in testing as users often perceive it differently.

Choosing The Right Way To Test

There are plenty of different tests that you could use:

- Banana test 🍌

Replace key actions with “bananas,” ask to explain.

- Cloze test 🕳️

Remove words from your copy, ask users to fill in the blanks.

- Reaction cards 🤔

Write up emotions on 25 cards, ask users to choose.

- Card sorting 🃏

Ask users to group topics into meaningful categories.

- Highlighting 🖍️

Ask users to highlight helpful or confusing words.

- Competitive testing 🥊

Ask users to explain competitors’ pages.
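As an illustration of the Cloze test from the list above, here is a minimal sketch that blanks out every n-th word of a piece of copy and keeps the removed words as an answer key. The function name `make_cloze`, the default interval of five, and the sample sentence are assumptions made for this example. Real Cloze tests may choose which words to remove differently.

```python
def make_cloze(text, every=5, blank="_____"):
    """Replace every n-th word with a blank and return the text plus the removed words."""
    words = text.split()
    answers = []
    for index in range(every - 1, len(words), every):
        answers.append(words[index])
        words[index] = blank
    return " ".join(words), answers

# Hypothetical copy to test with participants.
copy = "Your subscription renews automatically every month unless you cancel before the renewal date"
cloze_text, answer_key = make_cloze(copy, every=5)
print(cloze_text)
print(answer_key)
```

Punctuation attached to a removed word disappears with it in this simple version, so you may want to clean the copy first or adjust the blanks by hand.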

When choosing the right way to test, consider the following guidelines:

- Do users understand?

Interviews, highlighting, Cloze test

- Do we match the mental model?

Banana testing, Cloze test

- What word works best?

Card sorting, A/B testing, tree testing

- Why doesn’t it work?

Interviews, highlighting, walkthroughs

- Do we know user needs?

Competitive testing, process mapping

Wrapping Up

In many tasks, there is rarely anything more impactful than the careful selection of words on a page. However, it’s not only the words being used that matter but also the voice and tone that you choose to communicate with customers.

Use the techniques above to test and measure how well people perceive content but also check how they perceive the end-to-end experience on the site.

Quite often, the right words used incorrectly on a key page can convey a wrong message or provide a suboptimal experience. Even though the rest of the product might perform remarkably well, if a user is blocked on a critical page, they will be gone before you even blink.

Useful Resources

- Practical Guide To Content Testing, by Intuit

- How To Test Content With Users, by Kate Moran

- Five Fun Ways To Test Words, by John Saito

- A Simple Technique For Evaluating Content, by Pete Gale


(cm, vf, il)

The Role Of Illustration Style In Visual Storytelling


Thomas Bohm 2025-01-14T08:00:00+00:00

2025-03-04T21:34:45+00:00

Illustration has been used for tens of thousands of years. One of the first ever recorded drawings was a hand silhouette found in Spain that is more than 66,000 years old. Fast forward to the widespread adoption of the internet in the late 1990s, and illustration has gradually increased in use since. Popular examples of this are Google’s daily doodles and the Red Bull energy drink, both of which use funny cartoon illustrations and animations to great effect.

Typically, illustration was done using pencils, chalk, pens, etchings, and paints. But now everything is possible — you can do both analog and digital or mixed media styles.

As an example, although photography might be the most popular method to communicate visuals, it is not automatically the best default solution. Illustration offers a wider range of styles that help companies engage and communicate with their audience. Good illustrations create a mood and bring to life ideas and concepts from the text. To put it another way, visualisation.

Good illustrations can also help give life to information in a better way than just using text, numbers, or tables.

How do we determine what kind of illustration or style would be best? How should illustration complement or echo your corporate identity? What will your main audience prefer? What about the content, what would suit and highlight the content best, and how would it work for the age range it is primarily for?

Before we dive into the examples, let’s discuss the qualities of good illustration and the importance of understanding your audience. The rubric below will help you make good choices for your audience’s benefit.

What Makes A Good Illustration

- Visualises something from the content (something that does not exist or has been described but not visualised).

- Must be aesthetically pleasing, interesting, and stimulating to look at (needs to have qualities and harmonies between colour, elements, proportions, and subject matter).

- Must have a feel, mood, dramatic edge, or attitude (needs to create a feeling and describe or bring to life an environment).

- The illustration should enhance and bring to life what is described in text and word form.

- Explains or unpacks what is written in any surrounding text and makes it come to life in an unusual and useful way (the illustration should complement and illuminate the content so readers better understand the content).

Just look at what we are more often than not presented with.

The importance of knowing about different audiences

It is really important to know and consider different audiences. Not all of us are the same or have the same physical and cognitive abilities, education, or resources. Our writing, designs, and illustrations need to take into account users’ make-up and capabilities.

There are some common categories of audiences:

- Child,

- Teenager,

- Middle-aged,

- Ageing,

- Prefer a certain style (goth, retro, modern, old fashioned, sporty, branded).

Below are interesting examples of illustrations, in no particular order, that show how different styles communicate and echo different qualities and affect mood and tone.

Watercolour

Good for formal, classy, and sophisticated imagery that also lends itself to imaginative expression. It is a great example of texture and light that delivers a really humane and personal feel that you would not get automatically by using software.

Strengths

- Feeling, emotion, and sense of depth and texture.

Drawing With Real-life objects

A great option for highly abstract concepts and compositions with a funny, unusual, and unreal aspect. You can do some really striking and clever stuff with this style to engage readers in your content.

Strengths

- Conceptual play.

Surreal Photomontage

Perfect for abstract hybrid illustration and photo illustration with a surreal fantasy aspect. This is a great example of merging different imagery together to create a really dramatic, scary, and visually arresting new image that fits the musician’s work as well.

Strengths

- Conceptual mixing and merging, leading to new unseen imagery.

Cartoon

Well-suited for showing fun or humorous aspects, creating concepts with loads of wit and cleverness. New messages and forms of communication can be created with this style.

Strengths

- Conceptual.

Cartoon With Block Colour

Works well for showing fun, quirky, or humorous aspects and concepts, often with loads of wit and cleverness. The simplicity of style can be quite good for people who struggle with more advanced imagery concepts, making it quite accessible.

Strengths

- Simplicity and unclutteredness.

Clean Vector

Designed for clean and clear illustrations that are all-encompassing and durable. Due to the nature of this illustration style, it works quite well for a wide range of people as it is not overly stylistic in one direction or another.

Strengths

- Realism, conceptual, and widely pleasing.

Textured Vintage Clean Vector

Best suited for imagining rustic imagery, echoing a vintage feel. This is a great example of how texture and non-cleanliness can create and enhance the feeling of the imagery; it is very Western and old-fashioned, perfect for the core meaning of the illustration.

Strengths

- Aged feeling and rough impression.

Pictogram

Highly effective for clean, legible, quickly recognizable imagery and concepts, especially at small sizes. It is no surprise that many pictograms are to be seen in quick viewing environments such as airports and show imagery that has to work for a wide range of people.

Strengths

- Legibility, speed of comprehension (accessibility).

Abstract Geometric

A great option for visually attractive and abstract imagery and concepts. This style lends itself to much customising and experimentation from the illustrator, giving some really cool and visually striking results.

Strengths

- Visual stimulation and curiosity.

Lithography Etching

Ideal for imagery that has an old, historic, and traditional feel. Has a great feel achieved through sketchy markings, etchings, and a greyscale colour palette. You would not automatically get this from software, but given the right context or maybe an unusual juxtaposed context (like the clash against a modern, clean, fashionable corporate identity), it could work really well.

Strengths

- Realism and old tradition.

3D Gradient

It serves as a great choice for highly realistic illustration with a friendly, widely accessible character element. This style is not overly stylistic and lends itself to being accepted by a wider range of people.

Strengths

- Widely acceptable and appropriate.

Sci-fi Comic Book And Pop Art

It’s especially useful for high-impact, bright, animated, and colourful concepts. Some really cool, almost animated graphic communication can be created with this style, which can also be put to much humorous use. The boldness and in-your-face style promote visual engagement.

Strengths

- Animation.

Tattoo

Well-suited for bold block-coloured silhouettes and imagery. It is so bold and impactful, and there is still loads of detail there, creating a really cool and sharp illustration. The illustration works well in black and white and would be further enhanced with colour.

Strengths

- Directness and clarity.

Pencil

Perfect for humane, detailed imagery with plenty of feeling and character. The sketchy style highlights unusual details and lends itself to an imaginative feeling and imagery.

Strengths

- Humane and detailed imaginative feeling.

Gradient

Especially useful for highly imaginative and fantasy imagery. By using gradients and a light-to-dark colour palette, the imagery really has depth and says, ‘Take me away on a journey.’

Strengths

- Fantasy (through depth of colour) and clean feeling.

Charcoal

It makes an excellent option for giving illustration a humane and tangible feel, with echoes of old historical illustrations. The murky black-and-white illustration really has an atmosphere to it.

Strengths

- Humane and detailed feeling.

Woodcut

It offers great value for block silhouette imagery that has presence, sharpness, and impact. Is colour even needed? The black against the light background goes a long way to communicating the imagery.

Strengths

- Striking and clear.

Fashion

A great option for imagery that has motion and flair to it, with a slight feminine feel. No wonder this style is used for fashion illustrations: it is great for expressing lines and colours with motion, and it has a real fashion runway flair.

Strengths

- Motion and expressive flair.

Caricature

Ideal for humorous imagery and illustration with a graphic edge and clarity. The layering of light and dark elements really creates an illustration with depth, perfect for playing with the detail of the character, not something you would automatically get from a clean vector illustration. It has received more thought and attention than clean vector illustration typically does.

Strengths

- Detail and humour.

Paint

It serves as a great choice for traditional romantic imagery that has loads of detail, texture, and depth of feeling. The rose flowers are a good example of this illustration style because they have so much detail and colour shades.

Strengths

- Tradition and emotions.

Chalk

Well-suited for highly sketchy imagery that presents something as an idea or working concept. The white lines against the black background have an almost animated effect and give the illustrations real movement and life. This style is a good example of using pure lines in illustration to great effect.

Strengths

- Hand-realised and animation.

Illustration Sample Card

How To Start Doing Illustration

There are plenty of options, such as using pencils, chalk, pens, etchings, and paints, then possibly scanning in. You can also use software like Illustrator, Photoshop, Procreate, Corel Painter, Sketch, Inkscape, or Figma. But no matter what tools you choose, there’s one essential ingredient you’ll always need, and that is a mind and vision for illustration.

Recommended Resources

- Association of Illustrators

- “20 Best Illustration Agents In The UK, And The Awesome Illustrators They Represent,” Tom May

- It’s Nice That

- Behance Illustration

(yk)

9 Best WordPress Themes for 2025 (Free and Paid)

Wordpress

When it comes to building a WordPress website that doesn’t just look good today but can also hold its own tomorrow, staying power becomes paramount.

For Hongkiat.com readers (web designers, developers, and creatives who value innovation), this is especially true.

If a WordPress theme doesn’t look 2025-ready, doesn’t offer built-in flexibility, or hasn’t been actively maintained, it’s bound to cause headaches down the road.

Whichever design or theme you choose should be able to evolve alongside your business (or side project), not hold it back.

But with 5,000+ free and paid WordPress themes (and counting) on the market, it’s easy to feel lost.

So which ones really shine if you aim to stay ahead of the curve?

Below, we’ll take a look at the best WordPress themes (free and paid) in 2025, each one tested, refined, and backed by robust design capabilities.

The Best WordPress Themes for 2025 Include:

These themes feature intuitive page builders, beautiful designs, and the flexibility that developers crave. If you’re looking to streamline your workflow while ensuring your sites look next-level, read on.

Key Takeaways

- Focus on Future-Proofing: Themes must be actively updated and sport a contemporary look. That way, you won’t need to rebuild your site a year from now just because the theme is stuck in 2019.

- Thousands of Options, but Only a Few Will Do: With so many WordPress themes available, the real standouts for 2025 are those like UiCore PRO and Betheme, thanks to their extensive feature sets and design adaptability.

- Look for Developer-Friendly Features: A good theme in 2025 isn’t just about drag-and-drop. It’s about customization, easy mobile editing, high performance, and reliable support, all crucial for developers managing multiple sites or advanced features.

- Why These Themes Shine: Themes like UiCore PRO offer niche benefits such as agency-focused structures, while something like Betheme is famed for its multipurpose approach. Each suits a slightly different developer need, so you can choose based on your unique project.

- Support & Feedback: Themes with dedicated support, like Litho, often spark glowing reviews. This makes a difference when troubleshooting complex builds or rolling out advanced features.

What Sets These WordPress Themes Apart?

These top themes share defining traits that can streamline your development process and enhance your site’s UX.

- Ease of Use: Pre-built demos are fantastic, but only if they’re simple to edit. If you’re spending hours in a confusing backend, that’s a red flag. Themes highlighted here pride themselves on intuitive interfaces and well-documented builder tools.

- Multiple Builder Options: From WordPress’s native block editor (Gutenberg) to powerhouse plugins like Elementor, different developers have different preferences. These themes typically support multiple major page builders, ensuring you don’t have to alter your workflow.

- Flexible Customization: These best WordPress themes for 2025 come loaded with website demos, yet remain highly flexible. Tweak layouts, adjust color schemes, or integrate custom scripts: whatever your vision, you won’t be locked into a cookie-cutter style.

- Forward-Thinking Design: “2025-ready” means not just looking modern but ensuring sites can adapt to future design trends. The multipurpose demos included with each theme should remain fresh and relevant for years to come.

- Mobile Editing: A significant portion of web traffic comes from mobile devices. While nearly all top-tier themes boast responsive design, the page builder’s mobile editing features are vital. You need an easy way to refine how your site appears on various screen sizes.

- Performance: If a theme or builder loads slowly, everyone loses: developers spend more time waiting, and visitors bounce. Each theme here scores well on performance tests, so you can focus on building your site rather than dealing with speed issues.

- Reliable Customer Support: Even pro developers appreciate a guiding hand when deadlines loom. Whether it’s a ticket-based system, knowledge base, or community forum, these themes are backed by active support teams.

Quick Reality Check

It’s tempting to think finding a perfectly matched theme is a walk in the park. While the process can be straightforward with proper research, choosing a future-ready theme is crucial to avoid unexpected redesigns. Keep these points in mind:

- Make a Strong First Impression: Your site should look professional and stand out in a crowded online space. Every theme mentioned here can help you achieve that when used effectively.

- Future Readiness Is Non-Negotiable: As web standards shift, so do theme requirements. A theme that’s frequently updated and built on flexible code can evolve right along with your business or your personal brand.

The Themes at a Glance

In creating this list, we considered:

- Performance & Adaptability

- Developer Tools

- Business Owner Requirements for 2025 and Beyond

All of these future-proof themes feature clean code, top-notch responsiveness, and SEO-ready structures.

Why These Themes Excel

- Performance: Comprehensive demo libraries to launch site projects quickly

- Ease of Use: Intuitive drag-and-drop builders for pages, headers, footers, and even WooCommerce

- Adaptability: Design blocks, templates, developer-friendly layouts, and more

- Ongoing Support: Responsive help desks, thorough documentation, and video tutorials for quick problem-solving

Your Next Steps

- Preview Themes & Builders: Take time to explore each theme’s demos. See if their builder tools align with your typical workflow, whether that’s Elementor, WPBakery, or another preferred editor.

- Match Templates to Project Specs: If you want to see how a theme might look for an eCommerce site versus a personal portfolio, explore the pre-built websites and templates. These provide insight into the theme’s range and design capabilities.

- Pick One That Feels Right: Ultimately, the best theme is the one that keeps pace with your vision and offers the right balance of features, customization options, and ease of use for your specific needs.

1. UiCore Pro: WordPress Theme for Gutenberg and Elementor

UiCore Pro’s impressive array of blocks, widgets, and page sections allows you to customize every nook and cranny of your website.

Its standout feature is its huge library of website templates, template blocks, and inner pages. A beautiful example is Slate, one of UiCore Pro’s top 10 downloaded demos in 2024. Slate would provide an ideal template for creating a services-oriented startup. New pre-built websites are added monthly to the existing library of 60+.

Other features you will love:

- Next-Gen Theme Options: Provides total control over your site’s look and feel

- Theme Builder: Does the same for the static elements of your website

- Premium Widgets: 250+ premium widgets that take the place of plugins you might otherwise need to generate traffic to your site

- Admin Customizer: Allows users to personalize the admin panel’s look and feel to suit their preferences

- White Label Option: Ideal for anyone interested in customizing UiCore Pro to conform to their own brand

Primary users include agencies, architects, online shop owners, startups, and SaaS providers.

Current Rating: 4.6 on Trustpilot

2. Betheme: Fastest Theme of Them All

With Betheme, it’s possible to build virtually any type or style of website quickly. That is good news for busy web designers, web developers, and businesses seeking an online presence.

Betheme’s standout feature (one of several) is its outstanding library of 700+ responsive and fully customizable pre-built websites, and each is just a click away. New demos are made available every month.

How would one of these pre-built websites help you get a project off to a quick start? Try the Be Gadget example, a top downloaded demo in 2024. If you are thinking of opening a small online shop, you might be able to put it to immediate use.

Other cool features include:

- Superfast BeBuilder: Completely rewritten for enhanced speed and performance, making site building faster than ever

- WooBuilder: Includes 40+ WooCommerce demos, allowing quick and easy creation of online stores

- Customizable Layouts: Offers flexible layout options for portfolio, blog, and shop pages

- Tools for All Users: A Developer Mode and a Query Loop Builder for developers, Popups for Marketers, and a WooCommerce Builder for sellers

- Five-Star Customer Support: Betheme’s customer service center ensures five-star treatment

3. Blocksy: Popular WooCommerce Theme